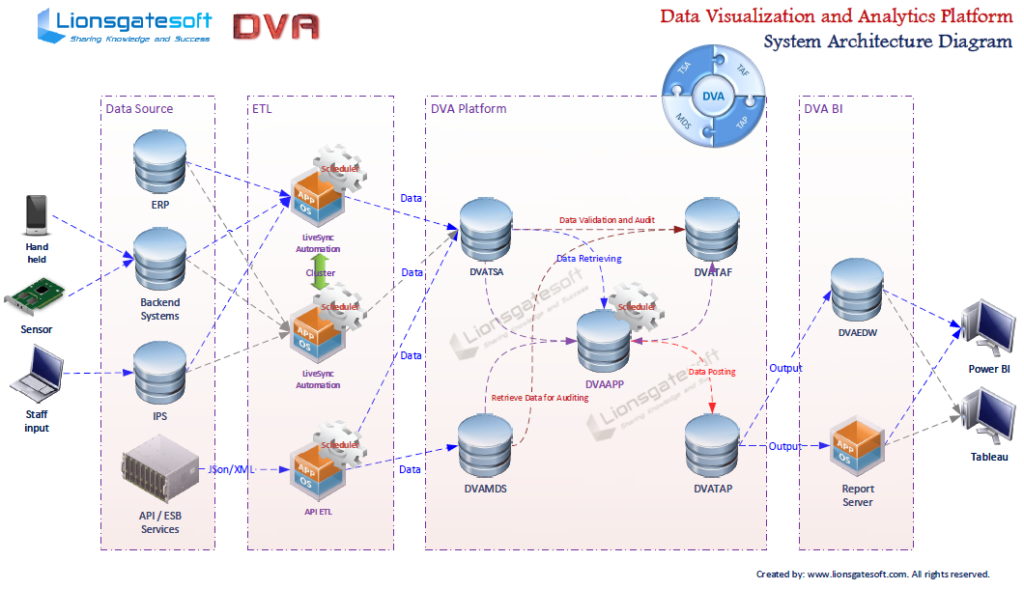

One of my municipality clients has a requirement to handle batch data from multiple data sources for visualization and analytics needs. The data source can be ERP system, sensors or internal databases. Sensors load data into time series database continuously. It requires BI dashboard show charts based on latest data in near real-time manner.

Let’s see how we design the architecture to satisfy the requirements.

First, we have data sources listed in the left, including ERP, time series database-based backend systems, other databases and data flow from APIs ;

Second, we have ETL process to load data from data sources to DVA platform. You may choose any ETL tool you like, but we highly recommend Lionsgate Software’s LiveSync Automation here. It’s a 24*7 non-stop data replication tool which features cross-platform, high availability, high performance and dynamic deployment. As a cluster application, LiveSync Automation can be deployed to multiple application server nodes such as JBoss or WildFly, if one node fails, another node kicks in to perform data replication jobs continuously. LiveSync Automation has embedded scheduler to trigger pre-defined data replication jobs.

Third, let’s see how we design the data platform to handle the batch data from ETL. DVA Platform Core Component:

- DVAAPP: DVA Application. All data processing logic, data flow management, will be stored in DVAAPP schema;

- DVATSA: Transaction Staging Area, contains transaction data loaded from data sources by ETL tool, e.g., LiveSync Automation;

- DVAMDS: Master Data Store, contains master data loaded from master databases by ETL tool;

- DVATAF: Transaction Auditing Framework. A database-driven schema to utilize auditing rules based on DVAMDS to screening and filtering data in DVATSA;

- DVATAP: Transaction Activity Posting. Streaming data is loaded into DVATAP schema after auditing.

Fourth, a scheduler is going to call stored procedures in DVAAPP to run through business rules defined in DVATAF for data standardization, data quality, data cleansing, data validation and data auditing, and load data into DVATAP.

Last, DVATAP data can be loaded into DVAEDW (Enterprise Data Warehouse) or other downstream systems. MS Power BI or Tableau may load data from DVAEDW for data visualization and analytics.

Please note ETL tool LiveSync Automation uses embedded scheduler to trigger pre-defined data replication jobs. This batch data processing architecture also relies on scheduler. This solution provides data refreshing based on the intervals of schedulers.

Lionsgate Software consultants have decades of experience designing complex data platform, architecture and database-driven solutions. Should you have any questions, please contact us.